Artificial Intelligence (AI) is often regarded as the modern-day equivalent of electricity, powering countless human interactions daily. However, startups and developing nations face a clear disadvantage as Big Tech companies and richer nations dominate the field, especially when it comes to two critical areas: training datasets and computational power.

The global regulatory landscape for AI is highly complex and fragmented, shaped by divergent regulations and by collaborations among stakeholders in both the private and public sectors. This complexity is compounded by the need to harmonize regulatory frameworks and standards across international borders.

The rules governing the use of copyrighted material in AI training datasets differ across regions. For instance, the European Union’s AI Act requires providers of general-purpose AI models to comply with EU copyright law, including honoring rights holders’ reservations (opt-outs) against text and data mining, so copyrighted material cannot be used for training where its rights holders have opted out. Japan’s Text and Data Mining (TDM) law, by contrast, permits the use of copyrighted data for AI model training without distinguishing between legally and illegally accessed materials. China, for its part, has introduced several principles and regulations governing AI training datasets that are closer to the EU approach in that they require training data to be lawfully obtained. However, those regulations apply only to AI services accessible to the general public and exclude those developed and used by enterprises and research institutions.

The regulatory environment often shapes a startup’s trajectory, significantly influencing its ability to innovate and scale. An AI startup focused on training models, whether in the pre-training or post-training phase, will face different regulatory challenges depending on the region in which it operates, and those challenges could affect its long-term success. For example, a startup in Japan would have an advantage over one in the EU in crawling copyrighted internet data and using it to train powerful AI models, because Japan’s TDM law permits that use. Because AI technologies transcend national borders, these disparities call for collaborative, cross-border solutions and global cooperation among key stakeholders.

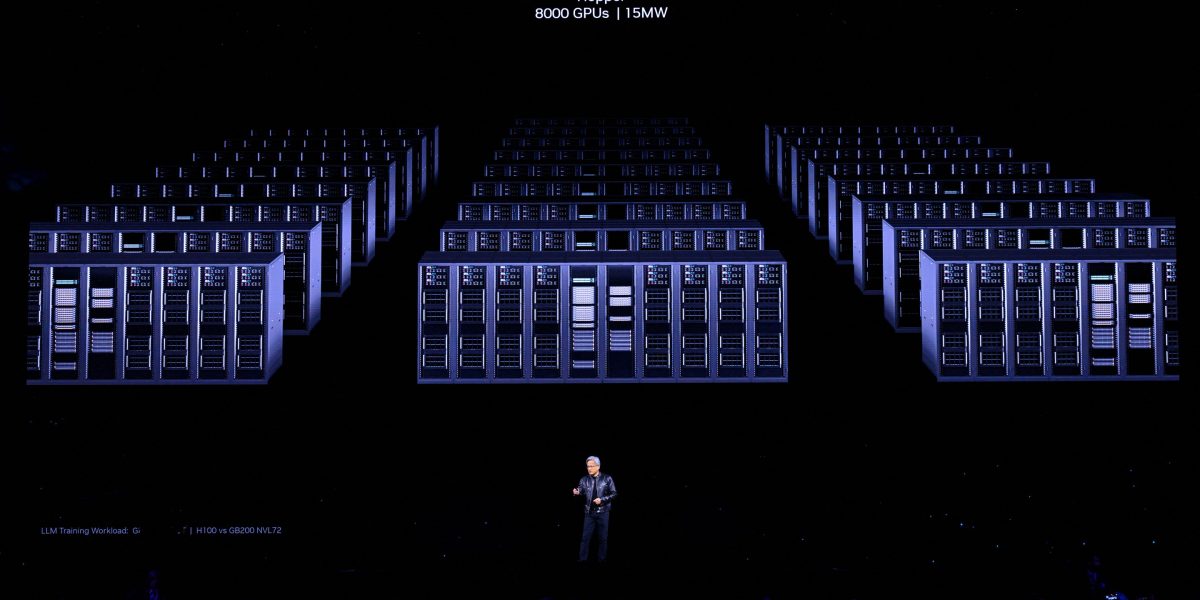

In terms of computational power, a significant disparity exists between large players, whether state-owned or private, and startups. Bigger tech companies and state entities have the resources to buy and hoard the computational power their future AI development goals will require, whereas smaller players without those resources depend on the bigger players for AI training and inference infrastructure. Supply chain constraints on compute resources have widened this gap, which is even more pronounced in the Global South. For example, not one of the world’s top 100 high-performance computing (HPC) clusters capable of training large AI models is hosted in a developing country.

In October 2023, the UN’s High-Level Advisory Body (HLAB) on AI was formed as part of the UN Secretary-General’s Roadmap for Digital Cooperation to offer UN member states analysis and recommendations for the international governance of AI. The group is made up of 39 members with diverse backgrounds (by geography, gender, age, and discipline), spanning government, civil society, the private sector, and academia, to help ensure that its recommendations for AI governance are both fair and inclusive.

As part of this process, we conducted interviews with experts from startups and small-to-medium enterprises (SMEs) to explore the challenges they face in relation to AI training datasets. Their feedback underscored the importance of a neutral, international body, such as the United Nations, in overseeing the international governance of AI.

The HLAB’s recommendations on AI training dataset standards, covering both pre-training and post-training, are detailed in its new report, *Governing AI for Humanity*, and include the following:

- Establishing a global marketplace for the exchange of anonymized data, underpinned by standardized data-related definitions, principles for the global governance of AI training data and its provenance, and transparent, rights-based accountability. This includes introducing processes and standards for data stewardship and exchange.

- Promoting data commons that incentivize the curation of underrepresented or missing data.

- Ensuring interoperability for international data access.

- Creating mechanisms to compensate data creators in a rights-respecting manner.

To address the compute gap, the HLAB proposes the following recommendations:

- Developing a network for capacity building under common-benefit frameworks to ensure equitable distribution of AI’s benefits.

- Establishing a global fund to support access to computational resources for researchers and developers aiming to apply AI to local public interest use cases.

International governance of AI, particularly of training datasets and computational power, is crucial for startups and developing nations. It provides a robust framework for accessing essential resources and fosters international cooperation, positioning startups to innovate and scale responsibly in the global AI landscape.

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.